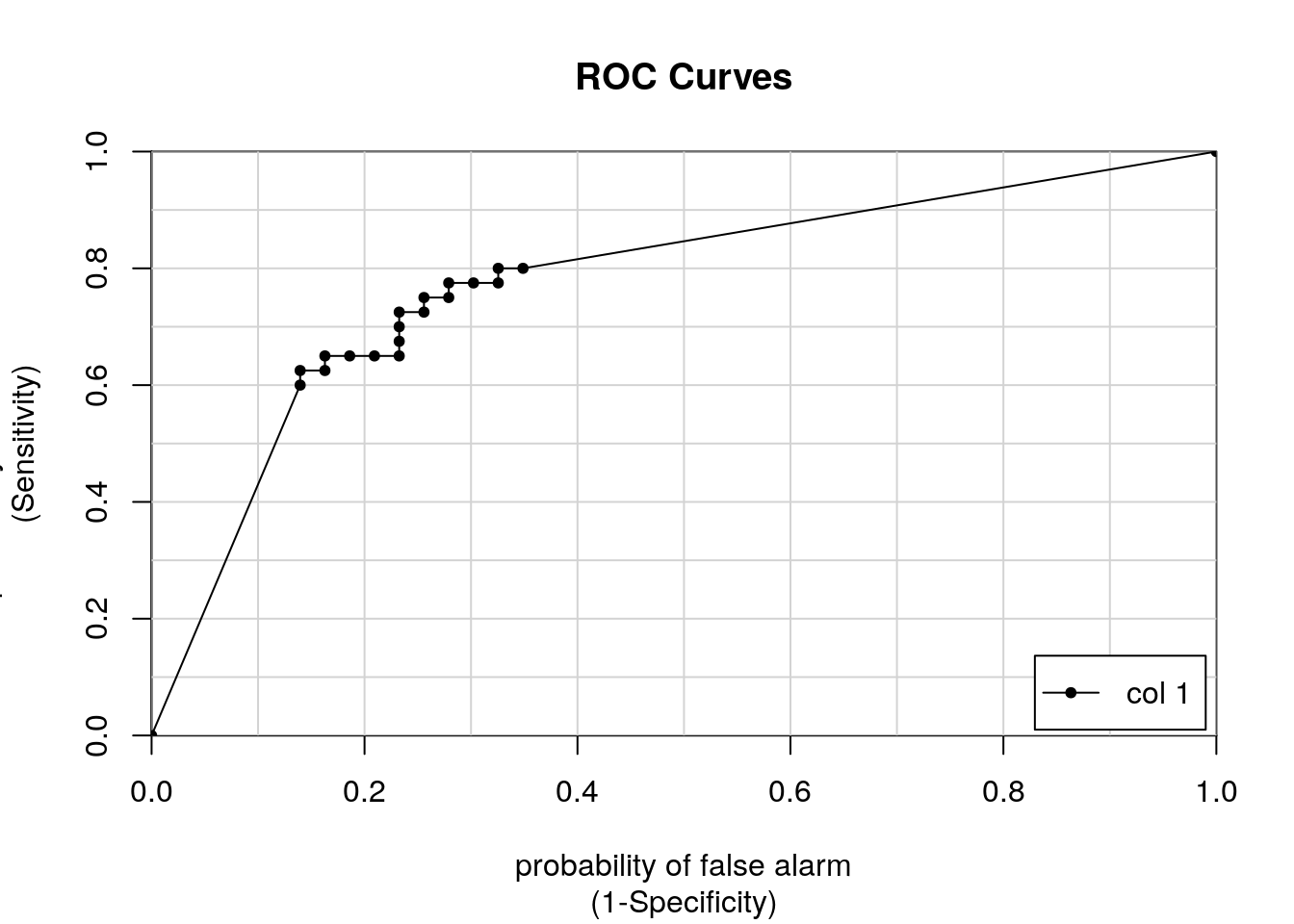

For the Fitted Model m, Approximately What Precision Can We Expect for a Recall of 0.8?

F1 score: $F_1 = 2 \cdot \dfrac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$. Precision is commonly called positive predictive value.
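As a minimal sketch of the F1 formula, the helper below computes the harmonic mean of precision and recall. The function name `f1_score` and the input values are illustrative assumptions, not taken from any particular model.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical inputs: a balanced model and a lopsided one.
balanced = f1_score(0.8, 0.8)   # precision and recall agree
lopsided = f1_score(1.0, 0.1)   # perfect precision, very poor recall

print(f"balanced: {balanced:.3f}")
print(f"lopsided: {lopsided:.3f}")
```

Because the harmonic mean is pulled toward the smaller of the two values, the lopsided case scores far lower than the arithmetic mean of its inputs would suggest.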

For the fitted model m, approximately what precision can we expect for a recall of 0.8? Now, if we evaluate the model by precision, there is a high chance that a person who is not eligible for a loan is still sanctioned one by the bank. I have a fitted model m from which to create a precision-recall curve. Adjusted R-squared is only 0.788 for this model, which is worse, right? It is helpful to know that the F1 score (F-score) is a measure of how accurate a model is, computed from precision and recall.

If we fit a simple regression model to these two variables, the following results are obtained. In particular, we begin to see some small bumps and wiggles in the income data that roughly line up with larger bumps and wiggles in the auto sales data. So ideally I want a measure that combines both these aspects in one single metric: the F1 score. R-squared measures the strength of the relationship between your model and the dependent variable on a convenient 0–100% scale.
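To make the R-squared idea concrete, here is a small sketch that fits a simple least-squares line and computes R-squared and adjusted R-squared. The data points and the helper names (`fit_line`, `r_squared`, `adjusted_r_squared`) are made up for illustration; they are not the income and auto sales series discussed above.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x (closed form)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(y, y_hat):
    """Share of the variance in y explained by the predictions y_hat."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Penalize R-squared for the number of predictors p, given n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical toy data, one predictor.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_line(x, y)
y_hat = [intercept + slope * xi for xi in x]
r2 = r_squared(y, y_hat)
adj_r2 = adjusted_r_squared(r2, n=len(y), p=1)

print(f"R-squared: {r2:.4f}, adjusted R-squared: {adj_r2:.4f}")
```

Adjusted R-squared is never larger than R-squared for a model with at least one predictor, which is why it is the safer number to compare across models with different numbers of variables.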

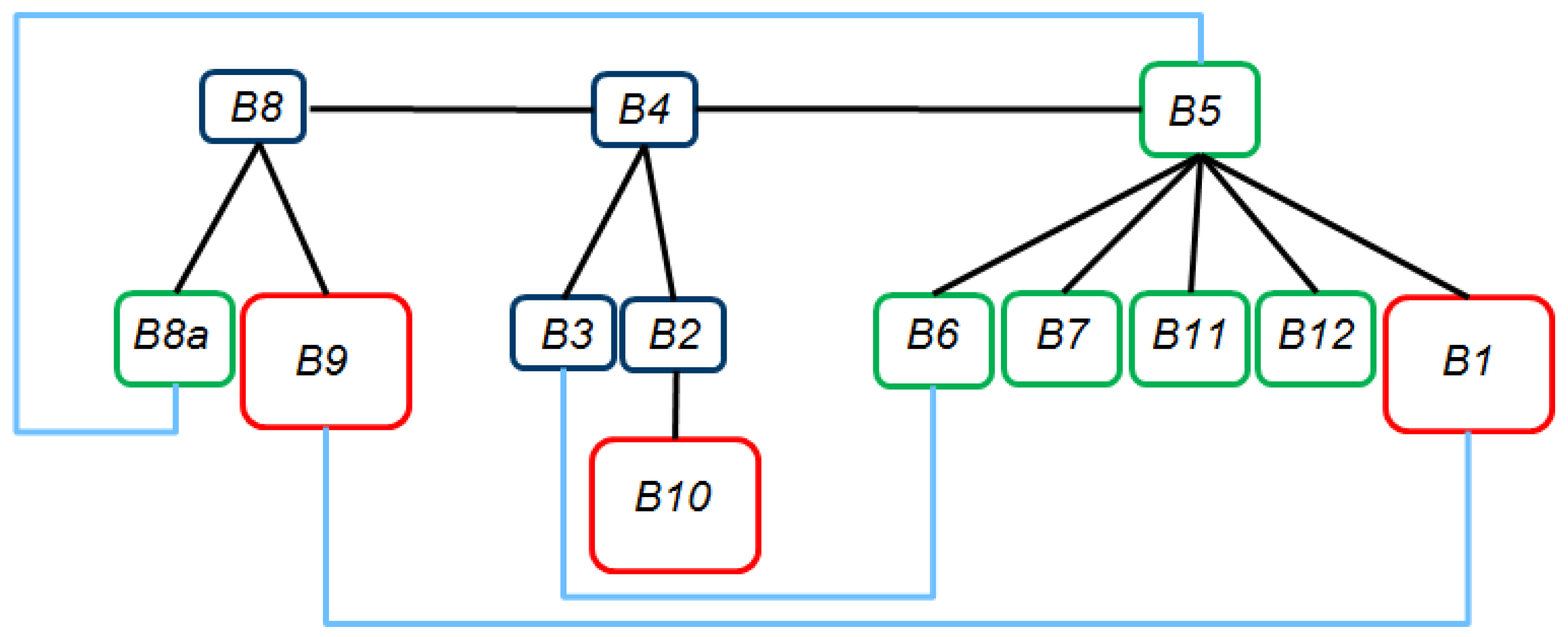

Recall of the model = 80 / (80 + 20) = 0.8. R-squared indicates the percentage of the variance in the dependent variable that the independent variables explain collectively. R-squared is a goodness-of-fit measure for linear regression models. As the regularization parameter increases, w2 will come closer and closer to 0.

Precision of the model = 80 / (80 + 8) ≈ 0.9. By looking at the image, we see that even using just x2 we can classify efficiently. So w1 will become 0 first. But we still need to look at the entire curve to make conclusive decisions.
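The arithmetic behind the recall and precision figures can be sketched as follows. This reads the numbers as 80 true positives, 20 false negatives, and 8 false positives, which is an interpretation of the figures quoted in the text rather than something the source states explicitly.

```python
# Assumed confusion-matrix counts (interpretation of the numbers in the text).
tp = 80  # true positives
fn = 20  # false negatives
fp = 8   # false positives

recall = tp / (tp + fn)      # share of actual positives that were found
precision = tp / (tp + fp)   # share of predicted positives that were correct

print(f"recall    = {recall:.2f}")
print(f"precision = {precision:.2f}")
```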

Now the model is 86% accurate, but if we consider the precision, we see it is 90%. A good model should have good precision as well as high recall. I need to find the value of precision corresponding to a recall of 0.8. But you need to convert the factors to.
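One way to read a precision off the curve at a given recall is sketched below. It builds the precision-recall points by sweeping the decision threshold over the predicted scores, then reports the best precision among the points whose recall is at least 0.8. The labels, scores, and function names are hypothetical; the fitted model m itself is not available here, so its predicted probabilities would have to be substituted for `scores`.

```python
def precision_recall_points(y_true, scores):
    """Return (threshold, precision, recall) for each distinct score used as a cutoff."""
    total_pos = sum(y_true)
    if total_pos == 0:
        raise ValueError("recall is undefined without positive labels")
    pairs = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points, tp, fp, i = [], 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Everything scoring >= threshold is predicted positive (ties move together).
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((threshold, tp / (tp + fp), tp / total_pos))
    return points


def precision_at_recall(y_true, scores, target_recall):
    """Best precision among thresholds that reach at least target_recall."""
    candidates = [p for _, p, r in precision_recall_points(y_true, scores)
                  if r >= target_recall]
    return max(candidates) if candidates else None


# Hypothetical labels and predicted scores standing in for the model's output.
y_true = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
scores = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20]

result = precision_at_recall(y_true, scores, target_recall=0.8)
print(f"precision at recall >= 0.8: {result:.2f}")
```

In practice a library routine such as scikit-learn's `precision_recall_curve` produces the same kind of points; the hand-rolled version is used here only to keep the sketch dependency-free.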

It is also interesting to note that the PPV can be derived using Bayes' theorem as well. In information retrieval, precision is a measure of result relevancy, while recall is a measure of how many truly relevant results are returned. Precision-recall is a useful measure of prediction success when the classes are very imbalanced. Suppose we have a dataset that can be fit with 100% training accuracy by a decision tree of depth 6.
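The Bayes' theorem route to PPV can be sketched as below: PPV = P(positive | predicted positive), expressed through sensitivity (recall), specificity, and the prevalence of the positive class. The function name `ppv_from_bayes` and the numeric inputs are illustrative assumptions.

```python
def ppv_from_bayes(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' theorem.

    PPV = sens * prev / (sens * prev + (1 - spec) * (1 - prev))
    """
    true_pos_rate = sensitivity * prevalence
    false_pos_rate = (1 - specificity) * (1 - prevalence)
    return true_pos_rate / (true_pos_rate + false_pos_rate)


# Same hypothetical classifier, two different class balances.
ppv_balanced = ppv_from_bayes(sensitivity=0.8, specificity=0.9, prevalence=0.5)
ppv_rare = ppv_from_bayes(sensitivity=0.8, specificity=0.9, prevalence=0.01)

print(f"PPV with 50% prevalence: {ppv_balanced:.3f}")
print(f"PPV with 1% prevalence:  {ppv_rare:.3f}")
```

The drop in PPV when the positive class is rare illustrates why precision and recall are more informative than accuracy on imbalanced data.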

F1 score: $F_1 = 2 \cdot \dfrac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$. These three metrics can be computed using the InformationValue package. In the case of a probabilistic model, we were fortunate enough to get a single number, the AUC-ROC. For instance, a model with parameters (0.2, 0.8) and a model with parameters (0.8, 0.2) can come out of the same model, hence these metrics should not be directly compared. In that case the bank may incur huge losses.